Update: If you want more practice data projects, be sure to check out http://www.teamleada.com

In this post, we again use a third-party data project taken from Kaggle, a company that hosts data science competitions. Since this was a prize competition rather than a learning exercise, users are no longer able to submit their predictions to Kaggle and receive a score. Therefore we thought we would simply explain and post our process for participating in this competition. We hope this serves as another didactic example for people to follow along with. Since we are learners ourselves, we'd appreciate any feedback!

What You Will Learn:

These tutorials are meant for ANYONE interested in learning more about data analytics, and they are designed so that you can follow along even with no prior experience in R. Some background in Statistics would be helpful (it makes the fancy words seem less fancy), but it isn't necessary. Specifically, if you follow through each section of this tutorial series, you will gain experience in the following areas:

- Implementing a machine learning model, namely Classification Trees

- Creating custom functions in R

- Constructing additional features to bolster your model

- Using regular expressions

- How to go from a data question/problem to a solution/prediction!

In Part I we will first break down the “March Machine Learning Mania” project and describe the steps for tackling this competition! It’s a little more challenging than the Titanic data project, and we’ll do our best to explain everything as concisely as possible.

Why You Should Follow Along:

MIT Professor Erik Brynjolfsson likens the impact of Big Data to the invention of the microscope: where the microscope enabled us to see things too small for the human eye, data analytics now enables us to see things that were previously too big. Just imagine the innovation the microscope spurred. To believe in this parallel is to believe that we are entering an extremely exciting time!

Hal Varian, Chief Economist at Google, said this about the field of Data Analytics and Data Science:

If you are looking for a career where your services will be in high demand, you should find something where you provide a scarce, complementary service to something that is getting ubiquitous and cheap. So what’s getting ubiquitous and cheap? Data. And what is complementary to data? Analysis. So my recommendation is to take lots of courses about how to manipulate and analyze data: databases, machine learning, econometrics, statistics, visualization, and so on.

Who We Are:

We are recent UC Berkeley grads who studied Statistics (among other things) and realized two things: (1) how essential an understanding of Statistics and Data Analysis was to almost every industry and (2) how teachable these analytic practices could be!

Tips for Following Along

We recommend copying and pasting all of the code snippets we have included. While copying and pasting lets you run the code, you should also read through it and develop an intuitive understanding of what is happening. Our goal isn’t necessarily to teach R syntax, but to give you a sense of the process of digging into data and to enable you to use other resources to learn R better.

New to R & RStudio?

No problem! First, go through our onboarding post: Installing R, RStudio, and Setting Your Working Directory

Kaggle Project: March Machine Learning Mania

To access the data project, you also need to become a Kaggle Competitor. Don’t worry, it’s free! Sign up for Kaggle here. You can go directly to the March Madness Competition here. Please take a quick read of the competition summary, data, and evaluation pages. Unfortunately, you cannot submit your model to Kaggle anymore. However, Kaggle has posted the solutions, so you can verify the accuracy of your model yourself if you would like! We have also included all of the necessary data files here: DropBox With Kaggle Competition Data

The essence of this competition is quite simple. Most of us have probably filled out a bracket in March (whether or not we watched any of the regular season) and gone to ESPN to see which school Bill Simmons or Barack Obama thinks will make it to the Big Dance. Well, now you can figure out for yourself who’s going to win using data analytics! If you did this perfectly this season, Warren Buffett would give you a billion dollars!

How to Begin

These competitions can be quite overwhelming, so it is good to break down the steps. In this part, we will go in-depth with each of the following steps:

- Taking a look at the datasets, noting column titles and rows

- Understanding the file you are submitting to Kaggle and more broadly what you are predicting

- Taking a step back now and brainstorming possible predictors!

Step One

Familiarizing Yourself with the Data

The first thing we need to do is download all of the datasets and load them into RStudio. You can download them all here or at this link here. Don’t forget to save them in a folder titled “Kaggle” on your desktop! If you’ve done one of our earlier tutorials, you’ve already made that very folder; the easiest thing to do is rename the existing folder (to, say, “Kaggle Titanic”) and create a new folder named “Kaggle” for this tutorial. Also make sure you’ve correctly set up your working directory!

Reading the Datasets into RStudio

We again use the read.csv() function and set stringsAsFactors = FALSE, which keeps text columns as plain character strings instead of converting them into categorical factors; this makes them easier to manipulate. Setting header = TRUE treats the first row of each file as column titles instead of data.
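To see what these two arguments do, here is a small sketch that reads a few made-up rows (hypothetical values, not real games) from a string through read.csv()’s text argument rather than from a file:

```R
# Two hypothetical rows in the same column layout as the regular season file
toyCsv <- "season,daynum,wteam,wscore,lteam,lscore,wloc,numot
A,20,501,81,502,74,H,0
A,25,503,66,501,60,N,1"

# header = TRUE: the first line becomes the column names
withHeader <- read.csv(text = toyCsv, stringsAsFactors = FALSE, header = TRUE)
names(withHeader)        # "season" "daynum" "wteam" ... "numot"
class(withHeader$wloc)   # "character"

# stringsAsFactors = TRUE: text columns become categorical factors
asFactors <- read.csv(text = toyCsv, stringsAsFactors = TRUE, header = TRUE)
class(asFactors$wloc)    # "factor"

# header = FALSE: the column titles are read as an ordinary data row
noHeader <- read.csv(text = toyCsv, stringsAsFactors = FALSE, header = FALSE)
nrow(noHeader)           # 3 rows instead of 2
names(noHeader)          # "V1" "V2" ... "V8"
```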


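```R
# File names as distributed with the 2014 Kaggle competition data;
# adjust them if your downloaded files are named differently.
regSeason <- read.csv("regular_season_results.csv", stringsAsFactors = FALSE, header = TRUE)
seasons <- read.csv("seasons.csv", stringsAsFactors = FALSE, header = TRUE)
teams <- read.csv("teams.csv", stringsAsFactors = FALSE, header = TRUE)
tourneyRes <- read.csv("tourney_results.csv", stringsAsFactors = FALSE, header = TRUE)
tourneySeeds <- read.csv("tourney_seeds.csv", stringsAsFactors = FALSE, header = TRUE)
tourneySlots <- read.csv("tourney_slots.csv", stringsAsFactors = FALSE, header = TRUE)
```

We use the `head()` and `tail()` functions to get a quick look at what these datasets contain. `head()` returns the first six rows and `tail()` returns the last six rows of a dataset. We will look at the two most important datasets here, but you should check out all of them.

```R
head(regSeason)
tail(regSeason)
```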

What does each of these columns represent? Read the Kaggle data descriptions for more detail; we also provide a quick dictionary below.

- “season” = Season, identified by a letter (seasons are labeled alphabetically: A, B, C, …)

- “daynum” = Day Number of the season

- “wteam” = Winning Team ID

- “wscore” = Winning Team Score

- “lteam” = Losing Team ID

- “lscore” = Losing Team Score

- “wloc” = Win Location (Home, Away, Neutral)

- “numot” = Number of Overtimes (Not counted until season J)
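Because each row records one game from both the winner’s and the loser’s side, a team’s season record can be read off by counting how often its ID appears in wteam versus lteam. A minimal sketch, assuming regSeason has been loaded as above; teamRecord() is a hypothetical helper and the toy rows are made up:

```R
# Hypothetical helper: win/loss record for one team in one season.
# Each row is a single game, so a team appears in wteam for its wins
# and in lteam for its losses.
teamRecord <- function(games, season, team) {
  s <- games[games$season == season, ]
  c(wins = sum(s$wteam == team), losses = sum(s$lteam == team))
}

# Made-up games in the regSeason column layout (not real results)
toyGames <- data.frame(
  season = c("A", "A", "A", "B"),
  daynum = c(20, 25, 30, 12),
  wteam  = c(501, 503, 501, 501),
  wscore = c(81, 66, 70, 77),
  lteam  = c(502, 501, 503, 502),
  lscore = c(74, 60, 68, 71),
  wloc   = c("H", "N", "A", "H"),
  numot  = c(0, 1, 0, 0),
  stringsAsFactors = FALSE
)

teamRecord(toyGames, "A", 501)   # 2 wins, 1 loss
# With the real data, e.g.: teamRecord(regSeason, "N", 503)
```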

Now let’s take a look at the tournament results dataset.

```R
head(tourneyRes)
tail(tourneyRes)
```

For the most part, its columns are the same as those of the regSeason dataset.

Step Two

Understanding the End Goal

Understanding the submission file allows you to frame the dataset you need to create to build your model. At a high level, remember that we need to create two datasets, which we will call Train and Test: we use Train to build the model and then make predictions on the Test data. Knowing what we are predicting tells you what form the data must take and what data our model requires. Now, take a look at the “sample_submission.csv” file in the data provided by Kaggle.

In the first stage of the Kaggle competition, you must make predictions for every possible tournament matchup between every pair of tournament teams for seasons N, O, P, Q, and R (each letter represents a season). Taking season N as an example, there were a total of 65 teams, so you have to make predictions for Team 1 vs. Team 2, Team 1 vs. Team 3, … Team 1 vs. Team 65, then Team 2 vs. Team 3, Team 2 vs. Team 4, … Team 2 vs. Team 65, and so on until every combination is listed for each of seasons N-R.
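The number of rows per season follows directly: with n teams, each team faces the other n - 1, and every pair would otherwise be counted twice, so there are n(n - 1)/2 matchups. A quick check in R for the 65 teams of season N:

```R
# Number of unordered pairs among n teams: n * (n - 1) / 2
numTeams <- 65                  # season N, as described above
numTeams * (numTeams - 1) / 2   # 2080
choose(numTeams, 2)             # 2080, via R's binomial coefficient
```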

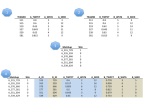

Below is a screenshot of the “sample_submissions.csv” file:

Notice that the “id” column uses the format SEASONLETTER_TEAMID_TEAMID, with the lower team ID listed first. The “pred” column holds a number between 0 and 1 representing the probability that the first (left-hand) TEAMID wins.

“N_503_506” represents season “N” and a possible matchup between team ID 503 and team ID 506. The “0” in the “pred” column represents the probability of team ID 503 winning (or, equivalently, of team ID 506 losing).
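When you later join predictions back to game data, it helps to split an id into its parts. A minimal sketch; parseId() is a hypothetical helper, not something Kaggle provides:

```R
# Hypothetical helper: split ids like "N_503_506" into season and team IDs
parseId <- function(ids) {
  # strsplit returns one character vector per id; rbind stacks them into a matrix
  parts <- do.call(rbind, strsplit(ids, "_", fixed = TRUE))
  data.frame(season = parts[, 1],
             team1  = as.integer(parts[, 2]),  # team whose win probability is "pred"
             team2  = as.integer(parts[, 3]),
             stringsAsFactors = FALSE)
}

# Two rows: season "N", team1 503, team2 506 and 510
parseId(c("N_503_506", "N_503_510"))
```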

Creating the Submission File

The code below is a little complicated and unnecessary for understanding the project; it’s probably best to copy and paste it now and interpret it later. Here we create a custom function, submissionFile(), which builds an id column in the form SEASONLETTER_TEAMID_TEAMID for a given season. We will explain how to create custom functions in more depth in our next post.

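```R
# Build every possible matchup id for one season: SEASONLETTER_TEAMID_TEAMID
submissionFile <- function(season) {
  # Tournament teams for this season, sorted so the lower team ID comes first
  playoffTeams <- sort(tourneySeeds$team[which(tourneySeeds$season == season)])
  numTeams <- length(playoffTeams)
  # matrix[i, j] holds the id string for team i vs. team j
  matrix <- matrix(nrow = numTeams, ncol = numTeams)
  for(i in c(1:numTeams)) {
    for(j in c(1:numTeams)) {
      matrix[i,j] <- paste(season, "_", playoffTeams[i], "_", playoffTeams[j], sep = "")
    }
  }
  # Keep only the upper triangle: each pair once, and no team vs. itself
  keep <- upper.tri(matrix, diag = FALSE)
  idcol <- vector()
  for(i in c(1:numTeams)) {
    for(j in c(1:numTeams)) {
      if(keep[i,j] == TRUE) {
        idcol <- c(idcol, matrix[i,j])
      }
    }
  }
  form <- data.frame("Matchup" = idcol, "Win" = NA, stringsAsFactors = FALSE)
  return(form)
}

# Stack the matchups for seasons N through R (LETTERS[14:18])
sub_file <- data.frame()
for(i in LETTERS[14:18]) {
  sub_file <- rbind(sub_file, submissionFile(i))
}
```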
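If the nested loops feel heavy, the same ids can be built with R’s combn(), which lists every pair of elements in the same order the loops produce (team 1 with teams 2, 3, …, then team 2 with teams 3, 4, …). This is our own alternative sketch, not the original code; submissionFileCombn() is a hypothetical name, and it takes the seeds table as an argument so it can be tried on toy data:

```R
# Alternative sketch: all SEASONLETTER_TEAMID_TEAMID ids for one season via combn()
submissionFileCombn <- function(season, seeds) {
  # Tournament teams for this season, sorted so the lower team ID comes first
  playoffTeams <- sort(seeds$team[seeds$season == season])
  # Each column of idx is one pair of positions (i < j); needs at least two teams
  idx <- combn(seq_along(playoffTeams), 2)
  data.frame(id = paste(season, playoffTeams[idx[1, ]], playoffTeams[idx[2, ]], sep = "_"),
             pred = NA, stringsAsFactors = FALSE)
}

# Made-up seeds table with the tourneySeeds columns (season, seed, team)
toySeeds <- data.frame(season = c("N", "N", "N", "O"),
                       seed   = c("W01", "W16", "X01", "W01"),
                       team   = c(506, 503, 510, 600),
                       stringsAsFactors = FALSE)
submissionFileCombn("N", toySeeds)$id   # "N_503_506" "N_503_510" "N_506_510"
```

With the real data, the five seasons could be stacked with `do.call(rbind, lapply(LETTERS[14:18], submissionFileCombn, seeds = tourneySeeds))`.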

Your First Submission!

The code below will create a file in your working directory (the Kaggle folder on your desktop) formatted for submission to the competition! It’s always good to make a quick submission to get the ball rolling. Here we simply guess 50% for every possible matchup, which is equivalent to flipping a fair coin to predict each game! Obviously we can predict with better accuracy, especially for games such as a 1 seed vs. a 16 seed. That is to come later… For now, we’ll make this naive prediction just to get a feel for the process.

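```R
# Rename the columns to match Kaggle's submission format
colnames(sub_file) <- c("id", "pred")
# Predict a 50% chance for every matchup
sub_file$pred <- 0.5
write.csv(sub_file, file = "sub1.csv", row.names = FALSE)
```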
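To check a submission against the solutions Kaggle posted, you need the competition’s metric. Our understanding is that submissions were scored with log loss (check the competition’s Evaluation page to confirm). A minimal sketch, with logLoss() as our own hypothetical helper; it also shows why the coin-flip submission scores about 0.693 no matter how the games turn out:

```R
# Log loss: -(1/n) * sum(y * log(p) + (1 - y) * log(1 - p))
# y: 1 if the first team in the id won, 0 otherwise; p: predicted probability.
logLoss <- function(y, p, eps = 1e-15) {
  p <- pmin(pmax(p, eps), 1 - eps)   # clip so log(0) never occurs (our choice)
  -mean(y * log(p) + (1 - y) * log(1 - p))
}

# Coin-flip predictions score log(2), about 0.693, whatever the outcomes
logLoss(c(1, 0, 1), c(0.5, 0.5, 0.5))

# A confident, correct prediction scores close to 0: -log(0.9), about 0.105
logLoss(c(1, 0), c(0.9, 0.1))
```

Because log loss punishes confident wrong answers heavily, moving away from 0.5 only pays off when the model is usually right.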

Step Three

Taking a Step Back, and Brainstorming!

This is the most creative part of data analytics and arguably the most important. Now that you know what you are predicting, think about how you want to predict it; the variables you come up with will be our predictors! Specifically, how can we use the data Kaggle has given us to predict each matchup, and more broadly, what indicates that a given team will win a game in March Madness? Some ideas involve data Kaggle doesn’t offer but that we may find intuitively significant; others are variables we can create from the datasets Kaggle gives us:

- Coaches Experience

- Average Team Experience

- Wins in the Last Six Games of the Season (We can create this!)

- Shooting Percentage
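As a taste of the one we can create, here is a minimal sketch of counting a team’s wins in its last six regular-season games from regSeason-style data, using daynum to order the games. lastNWins() is a hypothetical helper for illustration, not necessarily how we build this feature in Part II:

```R
# Hypothetical helper: wins in a team's last n regular-season games.
# Uses only regSeason-style columns: season, daynum, wteam, lteam.
lastNWins <- function(games, season, team, n = 6) {
  # All games this team played in the given season
  played <- games[games$season == season &
                  (games$wteam == team | games$lteam == team), ]
  # Sort by day number so tail() returns the most recent games
  played <- played[order(played$daynum), ]
  sum(tail(played, n)$wteam == team)
}

# Made-up schedule in which team 501 plays eight games
toyGames <- data.frame(
  season = "A",
  daynum = 1:8,
  wteam  = c(501, 501, 501, 501, 502, 501, 503, 501),
  lteam  = c(502, 503, 504, 505, 501, 502, 501, 504),
  stringsAsFactors = FALSE
)
lastNWins(toyGames, "A", 501)   # 4: wins on days 3, 4, 6 and 8
```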

The possibilities are limited only by your imagination, especially given today’s sensor and movement-tracking technology! It’s worth spending time brainstorming which indicators you think are important. Consult your basketball-fanatic friends for tips, then come back and see whether you can create indicator variables based on their advice. We may even get to verify how truly “knowledgeable” your friends are about basketball!

Conclusion

You have successfully read the data into RStudio and become more familiar with it. You’ve also created your first submission file! You may now have a few variables in mind that could help predict game outcomes. In Part II, we will work with the data and turn these variables into a data frame in RStudio. We’ll ultimately fit a machine learning model to make educated game outcome predictions! Thanks for reading!

In the meantime, check out the Leaderboard page:

March Machine Learning Mania Leaderboard

Or go on to Part II here!
